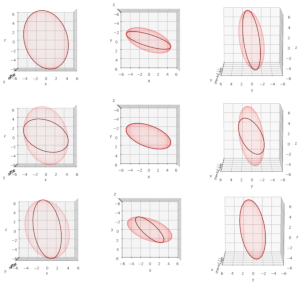

Orthogonal projections of multidimensional ellipsoids – II – the surface of the projection is a lower dimensional ellipsoid

In this post series we look at orthogonal projections of an ellipsoid in an n-dimensional (Euclidean) space onto an (n-1)-dimensional subspace. The ellipsoid is a closed surface in ℝⁿ and has a dimensionality of (n-1). Orthogonal projection means that the target subspace is orthogonal to a line of projection (defined by a vector) which is the same for all…
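As a rough numerical illustration of the claim in the title, here is a minimal NumPy sketch. It is not taken from the post. It assumes the ellipsoid is centered at the origin and written as xᵀAx = 1 with a symmetric positive definite matrix A, and it uses the standard fact that a linear image of such an ellipsoid has the shape matrix (BᵀA⁻¹B)⁻¹, where the columns of B form an orthonormal basis of the target subspace. The function name `project_ellipsoid` and the example values are hypothetical.

```python
import numpy as np

def project_ellipsoid(A, v):
    """Project the ellipsoid x^T A x = 1 along v onto the hyperplane orthogonal to v.

    Returns (B, S): B is an n x (n-1) orthonormal basis of the hyperplane,
    S is the (n-1) x (n-1) matrix such that the projected region, expressed
    in the coordinates y = B^T x, is {y : y^T S y <= 1}.
    """
    v = np.asarray(v, dtype=float)
    v = v / np.linalg.norm(v)
    # Rows 1..n-1 of Vt from the full SVD of the 1 x n matrix v^T span
    # the orthogonal complement of v.
    _, _, Vt = np.linalg.svd(v.reshape(1, -1))
    B = Vt[1:].T
    Sigma = np.linalg.inv(A)            # inverse shape matrix of the ellipsoid
    S = np.linalg.inv(B.T @ Sigma @ B)  # shape matrix of the projection
    return B, S

# Example (assumed values): a 3D ellipsoid with semi-axes 1, 2, 3,
# projected along the direction (1, 1, 1).
A = np.diag([1.0, 1.0 / 4.0, 1.0 / 9.0])
v = np.array([1.0, 1.0, 1.0])
B, S = project_ellipsoid(A, v)

# Sample points on the ellipsoid surface: with A = L L^T, x = L^{-T} z
# satisfies x^T A x = 1 for every unit vector z.
rng = np.random.default_rng(0)
z = rng.normal(size=(3, 2000))
z /= np.linalg.norm(z, axis=0)
L = np.linalg.cholesky(A)
x = np.linalg.solve(L.T, z)

# Project the samples and evaluate the quadratic form of the projected ellipse.
y = B.T @ x
q = np.einsum("im,ij,jm->m", y, S, y)
print("largest value of y^T S y over the samples:", q.max())
```

Every projected sample should satisfy yᵀSy ≤ 1 up to rounding, and values close to 1 mark points near the outline of the projection, which is the (n-1)-dimensional ellipsoid yᵀSy = 1.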