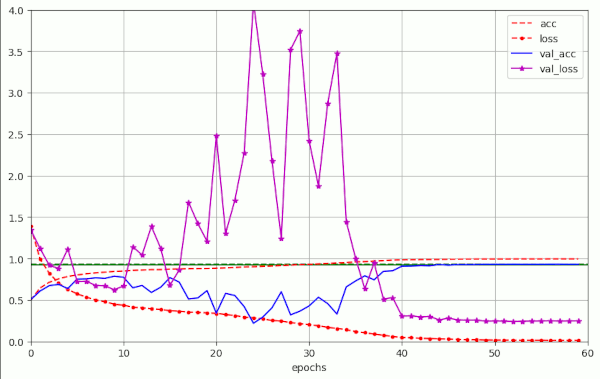

AdamW for a ResNet56v2 – V – weight decay and cosine shaped schedule of the learning rate

In this post series we look for methods to reduce the number of epochs needed to train ResNets on image datasets. Our test case is CIFAR10. In this post we test a modified cosine-shaped schedule for a systematic and fast reduction of the learning rate (LR). This supplements the approaches described in previous posts of this…
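To give a rough idea of what a cosine-shaped reduction of the learning rate looks like, here is a minimal sketch of a plain cosine schedule. The excerpt does not describe the modification used in the post, so this shows only the unmodified textbook form. The function name `cosine_lr` and the values for `lr_max`, `lr_min` and the number of epochs are illustrative assumptions, not settings taken from the post.

```python
import math


def cosine_lr(epoch, total_epochs, lr_max=1e-3, lr_min=1e-5):
    """Plain cosine decay from lr_max (first epoch) to lr_min (last epoch).

    All default values are hypothetical and chosen only for illustration.
    """
    if total_epochs <= 1:
        return lr_min
    # Clamp the epoch index and map it to a fraction t in [0, 1].
    last = total_epochs - 1
    t = min(max(epoch, 0), last) / last
    # Half a cosine period: the factor goes from 1 (t = 0) down to 0 (t = 1).
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * t))


if __name__ == "__main__":
    # Print the learning rate for a hypothetical 30-epoch run.
    for epoch in range(30):
        print(f"epoch {epoch:2d}  lr = {cosine_lr(epoch, 30):.6f}")
```

A function of this kind would typically be evaluated once per epoch (or per step) and its value handed to the optimizer. The curve falls slowly at first, fastest around the middle of the run, and flattens again near `lr_min`.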