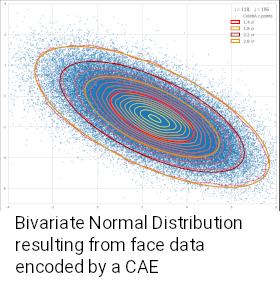

Bivariate Normal Distribution – derivation of the covariance and correlation by integration of the probability density

In a previous post of this blog we derived the functional form of the bivariate normal distribution [BND] of two one-dimensional random variables X and Y. By rewriting the probability density function [pdf] in terms of vectors (x, y)ᵀ and a matrix Σ⁻¹, we recognized that a coefficient appearing in a central exponential of the pdf could be…
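
The integration named in the title can be checked numerically before doing it by hand. The sketch below is only an illustration under stated assumptions: it uses the standard textbook form of a centered BND pdf (zero means), which may be parameterized differently from the one derived in the earlier post. The names `bnd_pdf`, `covariance_by_integration`, `sigma_x`, `sigma_y` and `rho` are hypothetical and chosen here for readability. It integrates the pdf on a grid with a plain Riemann sum and compares the result for the integral of x·y·pdf with the product `rho * sigma_x * sigma_y`.

```python
import numpy as np


def bnd_pdf(x, y, sigma_x, sigma_y, rho):
    """Textbook density of a centered bivariate normal distribution.

    Assumption: zero means, standard deviations sigma_x and sigma_y,
    and a coefficient rho with |rho| < 1 in the mixed term of the exponent.
    """
    norm = 1.0 / (2.0 * np.pi * sigma_x * sigma_y * np.sqrt(1.0 - rho**2))
    q = (
        (x / sigma_x) ** 2
        - 2.0 * rho * x * y / (sigma_x * sigma_y)
        + (y / sigma_y) ** 2
    )
    return norm * np.exp(-q / (2.0 * (1.0 - rho**2)))


def covariance_by_integration(sigma_x, sigma_y, rho, half_width=10.0, n=1201):
    """Approximate the integrals of pdf and x*y*pdf with a Riemann sum.

    The grid spans +/- half_width standard deviations in each direction,
    so the truncated tails contribute a negligible amount.
    """
    xs = np.linspace(-half_width * sigma_x, half_width * sigma_x, n)
    ys = np.linspace(-half_width * sigma_y, half_width * sigma_y, n)
    dx = xs[1] - xs[0]
    dy = ys[1] - ys[0]
    X, Y = np.meshgrid(xs, ys, indexing="ij")
    p = bnd_pdf(X, Y, sigma_x, sigma_y, rho)
    total = p.sum() * dx * dy          # should be close to 1 (normalization)
    cov = (X * Y * p).sum() * dx * dy  # E[XY], equal to Cov(X, Y) for zero means
    return total, cov


if __name__ == "__main__":
    sigma_x, sigma_y, rho = 1.5, 0.8, 0.6  # illustrative values only
    total, cov = covariance_by_integration(sigma_x, sigma_y, rho)
    print("normalization:", total)
    print("covariance by integration:", cov)
    print("rho * sigma_x * sigma_y:  ", rho * sigma_x * sigma_y)
    print("correlation:", cov / (sigma_x * sigma_y))
```

A grid sum is used instead of an adaptive quadrature routine to keep the example dependent on NumPy alone; for a smooth, rapidly decaying integrand like this one the simple sum is already very accurate.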