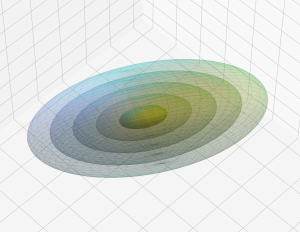

n-dimensional spheres and ellipsoids – III – Surface area of n-dimensional ellipsoid and its relation to MVN-statistics

In the 2nd post of this series we derived an explicit formula for the volume of an n-dimensional ellipsoid (in a Euclidean space). One reason for the relatively simple derivation was that the determinant of the generating linear transformation could be taken in front of the volume integral. Unfortunately, an analogous sequence of steps is not possible for an…
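The volume result recalled above can be illustrated with a minimal sketch. It assumes the ellipsoid is the image of the unit n-ball under an invertible linear map given by a square matrix, so that its volume is the absolute value of the determinant times the unit-ball volume. The function names `unit_ball_volume`, `determinant` and `ellipsoid_volume` are hypothetical and chosen only for this illustration; they are not taken from the series.

```python
import math


def unit_ball_volume(n):
    """Volume of the unit ball in n-dimensional Euclidean space."""
    return math.pi ** (n / 2) / math.gamma(n / 2 + 1)


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [[float(x) for x in row] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        # Pick the row with the largest entry in this column as pivot.
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0.0:
            return 0.0  # singular matrix
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def ellipsoid_volume(matrix):
    """Volume of the image of the unit n-ball under the linear map `matrix`.

    Assumption: the ellipsoid is generated as {A x : |x| <= 1}, so the
    constant Jacobian |det A| factors out of the volume integral.
    """
    n = len(matrix)
    return abs(determinant(matrix)) * unit_ball_volume(n)


if __name__ == "__main__":
    # Ellipse with semi-axes 2 and 3: area should equal 6 * pi.
    print(ellipsoid_volume([[2, 0], [0, 3]]), 6 * math.pi)
```

The factorization works for the volume because the Jacobian of a linear map is constant over the whole integration domain. The surface element has no such constant scaling factor, which is why the same shortcut is not available for the surface area.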