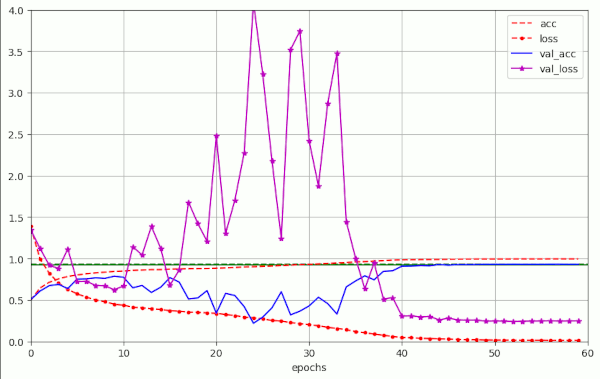

AdamW for a ResNet56v2 – IV – better accuracy and shorter training by pure weight decay and large scale fluctuations of the validation loss

Among other things, this post series is about efforts to reduce the number of training epochs for ResNets. We test our ideas with a ResNet applied to CIFAR10. So far we have tried out rather simple methods, such as modifying the schedule for the learning rate [LR]. In this post I describe experiments regarding a model using the AdamW optimizer, without…
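For readers new to AdamW, here is a minimal sketch of a single update step. It shows what "decoupled" weight decay means: the decay shrinks each weight directly, instead of being added to the gradient as an L2 penalty that Adam's adaptive denominator would then rescale. It assumes the common convention (used e.g. by PyTorch's `torch.optim.AdamW`) in which the decay term is multiplied by the learning rate. The function name `adamw_step` and its default values are illustrative assumptions, not the code or settings used in the experiments of this series.

```python
import math


def adamw_step(params, grads, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=1e-2):
    """One AdamW update on flat lists of floats (hypothetical helper).

    t is the 1-based step count. Returns the new (params, m, v).
    Weight decay acts on the parameters directly (decoupled), so it is
    NOT passed through the moment estimates or the adaptive denominator.
    """
    new_p, new_m, new_v = [], [], []
    for p, g, mi, vi in zip(params, grads, m, v):
        mi = beta1 * mi + (1 - beta1) * g        # first-moment estimate
        vi = beta2 * vi + (1 - beta2) * g * g    # second-moment estimate
        m_hat = mi / (1 - beta1 ** t)            # bias corrections
        v_hat = vi / (1 - beta2 ** t)
        adam_update = m_hat / (math.sqrt(v_hat) + eps)
        # Decoupled weight decay: shrink p in proportion to its own value.
        p = p - lr * (adam_update + weight_decay * p)
        new_p.append(p)
        new_m.append(mi)
        new_v.append(vi)
    return new_p, new_m, new_v
```

With a zero gradient only the decay term acts: `adamw_step([1.0], [0.0], [0.0], [0.0], t=1, lr=0.1, weight_decay=0.1)` shrinks the weight to about 0.99. Had the same decay been added to the gradient as an L2 term, Adam's normalization would have turned it into a step of roughly the full learning rate, which is the coupling that AdamW avoids.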