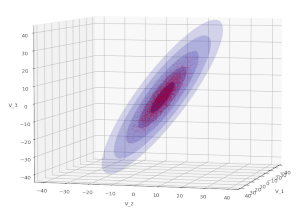

Bivariate normal distribution – derivation by linear transformation of a random vector of two independent Gaussians

In another post on properties of a Bivariate Normal Distribution [BVD], I motivated the form of its probability density function [pdf] by symmetry arguments and by the probability density functions of its marginals, namely 1-dimensional Gaussians. In this post we derive the probability density function by following the line of argumentation for a general Multivariate Normal Distribution…
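The transformation idea can be checked numerically with a minimal sketch. It assumes a random vector $Z = (Z_1, Z_2)^T$ of two independent standard Gaussians and an invertible $2 \times 2$ matrix $A$ with $X = A Z + \mu$. The change-of-variables rule then gives $f_X(x) = f_Z\big(A^{-1}(x - \mu)\big) / |\det A|$, which should coincide with the familiar BVD pdf for $\Sigma = A A^T$. The function names, the matrix `A` and the mean `mu` are hypothetical choices for illustration, not taken from the full post.

```python
import math

def std_normal_pdf(z):
    """1-D standard Gaussian density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def pdf_by_transformation(x, A, mu):
    """Density of X = A Z + mu with Z1, Z2 independent N(0, 1),
    via change of variables: f_X(x) = f_Z(A^{-1}(x - mu)) / |det A|."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("A must be invertible")
    dx, dy = x[0] - mu[0], x[1] - mu[1]
    # Explicit 2x2 inverse: A^{-1} = (1/det) [[d, -b], [-c, a]]
    z1 = (d * dx - b * dy) / det
    z2 = (-c * dx + a * dy) / det
    # Independence: the joint pdf of Z is the product of its marginals
    return std_normal_pdf(z1) * std_normal_pdf(z2) / abs(det)

def params_from_matrix(A):
    """Read sigma1, sigma2 and rho off the covariance matrix Sigma = A A^T."""
    (a, b), (c, d) = A
    s11 = a * a + b * b
    s22 = c * c + d * d
    s12 = a * c + b * d
    sigma1, sigma2 = math.sqrt(s11), math.sqrt(s22)
    return sigma1, sigma2, s12 / (sigma1 * sigma2)

def bivariate_normal_pdf(x, mu, sigma1, sigma2, rho):
    """Closed-form BVD pdf with standard deviations sigma1, sigma2 and correlation rho."""
    u = (x[0] - mu[0]) / sigma1
    v = (x[1] - mu[1]) / sigma2
    q = (u * u - 2.0 * rho * u * v + v * v) / (1.0 - rho * rho)
    norm = 2.0 * math.pi * sigma1 * sigma2 * math.sqrt(1.0 - rho * rho)
    return math.exp(-0.5 * q) / norm

# Usage: an illustrative A giving sigma1 = sigma2 = 1 and rho = 0.8
A = [[1.0, 0.0], [0.8, 0.6]]
mu = (1.0, -2.0)
s1, s2, rho = params_from_matrix(A)
for point in [(0.0, 0.0), (1.0, -2.0), (2.5, -1.0)]:
    print(point, pdf_by_transformation(point, A, mu),
          bivariate_normal_pdf(point, mu, s1, s2, rho))
```

Both columns agree because $z^T z = (x - \mu)^T \Sigma^{-1} (x - \mu)$ and $|\det A| = \sqrt{\det \Sigma} = \sigma_1 \sigma_2 \sqrt{1 - \rho^2}$. The explicit 2x2 inverse keeps the sketch free of external libraries.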