This post series is about mathematical aspects of so called “Multivariate Normal Distributions“. In respective literature two abbreviations are common: MNDs or MVNs. I will use both synonymously. To get an easy access, I want to introduce a MND as the result of a linear transformations applied to random vectors whose components can be described by independent 1-dimensional normal distributions.

Afterward I will discuss a more general definition of MNDs and derive their most important properties. As I am a physicist and not a mathematician, I will try not to become too formalistic. Readers with a mathematical education may apologize a lack of rigidity.

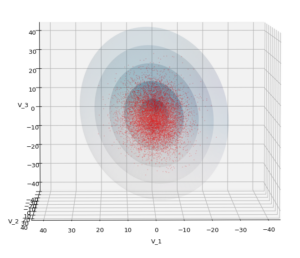

My perspective is that of a person who saw MNDs appear in different contexts of Machine Learning [ML]. Already the data samples used for the training of an ML algorithm may show properties which indicate an underlying MND. But also the analysis of data samples in the latent spaces of modern generative algorithms may reveal properties of MNDs. As we work with finite samples we have to apply numerical methods for the analysis for our data distributions and compare the results with theoretical predictions.

One helpful fact in this respect is that the probability densities of MNDs have contour hyper-surfaces defined by quadratic forms. We, therefore, have to deal with multidimensional ellipsoids and their 2-dimensional projections, namely ellipses. We have to cover these geometrical figures both with analytical and numerical methods.

The detection of MNDs and their analysis in a ML context can on the lowest level be done by investigating the variance-covariance matrix of multidimensional data distributions. We will have a look at methods for a geometrical reconstruction of MND contours with the help of coefficients of a respective matrix derived from available sample data by numerical methods. The theoretically predicted contour ellipses based on these coefficients can be compared with contours produced by a direct numerical interpolation of the sample’s vector data. I will also discuss the relation to PCA transformations and how to use them and their inverse for contour and vector reconstruction from selected data in two dimensional projections. Further mathematical methods, which check the MND compatibility of sample data more thoroughly, will be mentioned and discussed briefly.

In this first post I start our journey through the land of MNDs by describing a random vector composed of independent Gaussians. On our way I will briefly touch some basic topics as populations, samples, marginal distributions and probability densities in an intuitive and therefore incomplete way.

The posts in these articles require knowledge in Linear Algebra and a bit of vector analysis.

Basics: Samples of objects in Machine Learning and underlying populations

In Machine Learning we typically work with finite samples of discrete objects which have well defined properties or “features“.

When these features can be characterized by numerical values, the sample objects can mathematically be represented by points in the ℝn or corresponding position vectors. Such a position vector would reach out from the origin of a Cartesian coordinate system (spanning the ℝn) to a specific point representing a selected data object of our sample.

The coordinates of the data point or respective vector components are given by the feature values. This means that we deal with discrete distributions of data points and respective vectors in multi-dimensional Euclidean spaces.

Samples and statistics

We assume that the properties of the objects in an available ML sample follow some common underlying patterns (in the hope that an ML algorithm will detect such patterns during training). These properties would be encoded in the components of related vectors in the feature space. (And later on in the weights of a neural network trained on the sample’s object distribution in feature space …)

In what way does statistics enter this context?

The first point is that we hope that the concrete sample of vectors we use for the training of an ML-algorithm is representative for other samples of similar objects (and related vectors). We further assume that the object distribution in the feature space represents the “real” distribution of all possible objects across the common spectrum of features reasonably well. In other words:

We assume that the elements of our samples are taken via an unbiased statistical process from an underlying general population providing many more if not all possible objects of the same kind.

This is the reason why we think that the results of a ML training will reflect real patterns, typical for yet unseen samples, too.

Abstract population and the distribution of its elements in the feature space

The “population” is abstract with respect to multiple of its aspects:

- In most practical ML scenarios we have no direct access to it. We neither have a huge sample comprising all possible objects of the same kind or even infinite samples.

- Most samples in ML refer to concrete objects and not necessarily to abstract vectors in an Euclidean space like the ℝn we in the end work with.

- A “population” may also be the theoretical result of some kind of machinery or creation rules which at least in principle is able to produce all and very often a potentially infinite set of objects of the same kind. Take a genetic code as an example. However, in very many cases we do not even know the rules of this machinery when we start with a statistical analysis of the properties of one or multiple samples.

- If we have many samples and all show the same mean values of certain features and/or the same correlation of certain features we have reason to expect that some common underlying principle guided the creation of the objects in question.

Abstract space for an underlying population

Let us therefore assume that the elements of our population reside in abstract space Ω – which is accompanied

- by a kind of defined unbiased process ΨS for the “picking” of objects from it and

- by probabilities PΩ for picking certain types of objects, i.e. of objects which have certain specific properties, i.e. feature values, in common.

In many cases we have reasons to assume that the whole population shows a well defined object distribution with respect to certain properties. The distribution then could be used to define related probabilities PΩ. While we know rules behind such distributions in artificial, e.g. industrial object creation, it is sometimes not so easy to find and explain the origin of natural distributions. For an introduction to the origin of some elementary statistical distributions see here. Note that all statistical distributions allow for variations of feature values. In natural processes the variations correspond to fluctuations. The spectrum of such variations or fluctuations may follow some laws, e.g. guided by entropy maximization in nature.

Think about this twice – statistics enters the treatment of samples potentially on two levels:

(1) On a very basic level we have natural fluctuation processes accompanying the object creation of a population – and not all fluctuations around some mean values may occur on this level with the same frequency, but may follow some mathematical rules.

(2) The second level is that of creating samples from an underlying population. The statistical distribution of the sample objects will only reflect the object distribution in the full population if we follow an unbiased way of picking objects from the population. I.e. the picking process must not prefer certain objects of the population; the picking must occur blindly with respect to feature values. Each object must be “pickable” with the same probability from the population. By whatever means we guarantee this. Only then the statistical distribution of the finite sample across the feature space will approximately reveal the real distribution of population objects in feature space.

What analogies could provide visual examples of picking objects from a population? Think of little spherical balls representing our real objects, each ball with a defined color or other marks representing a certain set of properties. Now, we take all the spheres of the population, put them in a volume (like a cube) and mix them thoroughly. We sub-divide the cube virtually in many discrete, neighbouring sub-cubes. We then pick objects by guiding a picking arm to one of the sub-volumes with a probability equal for all volumes. I.e. we pick blindly with respect to properties, but with equal probability regarding the mixture. In between picks we may mix thoroughly again. (To guarantee equal picking with respect to defined sub-volume in a cube is sometimes more difficult than one might expect; see e.g. here.) In such a case the abstract space Ω would get a real representative counterpart.

Picking objects for a sample

We assume that the process which picks individual elements of the population and fills our sample is based on (blind) chance (with respect to object properties). If the sample is big enough it will thus approximate PΩ with respect to the distribution of population objects in feature space. I.e. we assume that our sample objects are picked without any bias regarding the properties of the objects of the underlying population.

What we in our case get is a mapping S from Ω to the ℝn :

As soon as we have such a map S and a description of a random picking process ΨS , we can repeat ΨS multiple times to create one or more samples of vectors. The production of different samples would then (due to the underlying probabilities PΩ) follow statistical laws:

For such proper statistical processes behind the sample creation we would assume that the probabilities derived from many and/or growing samples would approach the probabilities describing the (assumed) distribution of the objects in the underlying population (with potentially infinite members) in the feature space. The distributions of vectors in our samples with respect to certain properties of these vectors (and its components) should statistically approximate the properties of the underlying population and related probabilities PΩ. The more samples or the bigger samples we work with, the better the approximation.

How do we get and measure probabilities of the sample based distribution of vectors in the ℝn ? One option is that we sum up the number of vectors pointing into a certain discrete sub-volumes ΔV of the ℝn and set the results in relation to the number of all available vectors (and thus the covered part V of the ℝn). This gives us the probability PV of finding a vector with component values in ranges defined by the borders of ΔV.

For large samples (or averaging over many samples), we assume that the probability that S takes on a value s with an end-point in a certain measurable sub-space ΔV ∈ ℝn is defined by the probability to pick a respective element ω with the same property in the population space – namely to point into the discrete ΔV of the feature space. If we express the end-point lying in ΔV by a somewhat sloppy notation s ∈ ΔV, we arrive at :

Meaning: The probability of finding vectors pointing to a volume ΔV of the ℝn is defined by a probability PΩ of picking elements with fitting properties from the population.

Dense distributions of data points and vectors

The end points of vectors pointing to neighboring small volumes ΔV of the ℝn may fill the surrounding space relatively densely. The population may be based on a distribution of many objects with smoothly varying properties. Our map S will then lead to a dense distribution of points (and related vectors) in certain volumes of the ℝ or the ℝn.

By dividing the probability of finding an object in a small finite volumes ΔV by this volume we would get discrete values of a quantity we name a “probability density“. The probability density values would of course depend on the coordinates of the mid-point of ΔV . Such a process requires, by the way, that the target spaces of S functions should be measurable by virtues of some norm. In our case with the target space being the ℝn we, of course, use the standard Euclidean norm.

In many cases we may find profound indications from the analysis of large or many different ML samples – or from theories about our object population – that the individual points or vectors are distributed according to a certain well defined continuous “probability density function” [pdf]. This corresponds to a transition of a finite population to an infinite one with a dense object distribution and to a limit process for ever growing samples picked from such a distribution. Note that a continuous probability density in the ℝn requires three pre-requisites:

- A volume measure and a vector norm of the target space of S for object representation.

- S must cover certain volumes of the ℝn more and more densely by the endpoints of vectors for more and more objects added to continuously growing statistical samples.

- The number of points (end-points of vectors) per unit volume derived from the samples must vary relatively smoothly between neighboring volume elements and approach a continuous variation in the limit process to infinitely large samples and infinitesimal volume elements.

In case we believe that an available finite statistical sample of vectors indeed represents an underlying continuous vector distribution, we may want to approximate the latter by (multidimensional) numerical interpolations of the discrete numbers of points or vectors per volume element retrieved from of our samples. And compare the resulting curves for the probability density with theoretical curves of probability density functions based on the properties of the continuous distributions of specific vector populations.

In the following sections we want to define some very special vector distributions and their probability density functions in mathematical terms.

Random vectors and probability density functions

We take a multivariate random vector as a multidimensional “vector” S having 1-dim statistical random variables Sj as components.

A random variable Sj in our case is actually given by a function Sj: Ω→ℝ. Sj maps a set of objects taken from an underlying population to a statistical sample of real numbers. If applied multiple times Sj creates discrete distributions of points in ℝ. In a sense Sj therefore represents statistical sample distributions in ℝ.

A random vector S maps elements of Ω to vectors in the ℝn. It is a vector valued function. It indirectly represents resulting statistical distributions of vectors in (potentially infinite) samples of (position) vectors defined on the ℝn and respective distributions of data points. For a finite sample of vectors we can in principle index all the comprised vectors and list them up:

T symbolizes the transpose operation.

Correlated components of the random vector: Note that the components of vectors sk of a specific set may not be independent of each other. Meaning: For some sets and populations the components of the comprised vectors in the ℝn do not vary individually (aside of statistical fluctuations):

If we chose a component value skj, then the value ski of another component may have to fulfill certain conditions depending on the values of the first and/or other components. In other words: The individual distributions represented by the various Sj can e.g. be (pairwise)

- independent or dependent of each other,

- they can be uncorrelated or correlated.

This holds for finite sets with discrete vectors or potentially infinite sets of vectors whose endpoints cover a sub-volume of the ℝn densely. For a distinction of (un-) correlation from (in-) dependence see below.

Continuous distributions and probability densities in one dimension

A concrete sample vector sk ∈ ℝn of a vector distribution can be described by specific values sjk assumed by variables sj, which we use below to flexibly describe vector components. The sj determine the coordinates of respective data points. In the discrete case sj can only take on certain well defined values in ℝ (see above). For discrete distributions a concrete value sjk is assumed with a certain probability P(sjk). The factual probability given by a sample depends of course on the number of those objects in a set that reproduce sj and on the total number of samples. If such a definition is too fine grained we may use probabilities defined on intervals a ≤ sj ≤ b.

In case of an infinite population with a dense distribution of objects with smoothly varying properties, we can approach a continuous distribution of points in target regions of ℝ by creating larger and larger samples. In such a kind of limit process Sj may map properties of the objects in Ω onto a dense distribution of points in ℝ having a continuous 1-dimensional probability density – in the sense that the number of points in neighboring small intervals varies continuously.

Now let us look at a dense population with a distribution of objects with smoothly varying properties – and at extremely huge or infinite sets derived from it. If sj in a limit process can take all values in ℝ and cover selected finite intervals [a, b] of ℝ densely we may define a continuous probability density pj(sj) for infinitely small intervals within the finite intervals such that the probability for sj to assume values in an interval [a, b] is given by

pj(sj) characterizes an 1-dimensional distribution of dense a population represented by Sj. In some interesting cases the map of such a distribution and derived potentially infinite samples may cover large parts of ℝ or all of ℝ densely.

When we have a large sample of M objects with a number ΔMa,b being located in [a, b], and other objects in e.g. neighboring intervals, then we would assume:

Probability density of a random vector distribution

By making a limit transition to infinitely small volumes in the ℝn , we can extend the idea of a probability density to a random vector S representing some object population with the ℝn as feature space. The more elements we get in a statistical sample of vectors (based on picking from the population) the more will the difference in the numbers of vectors pointing into neighboring small volumes of equal size be described by differences of the population’s probability density given at the volumes’ centers.

In a limit process for an infinite population the endpoints of the sample’s position vectors would fill the ℝn (or a defined sub-space V of it; V ∈ ℝn ) more and more densely. In the end we would get an infinitely dense distribution in the sense that every infinitesimal volume dV in the covered region V would have vectors pointing to it. For huge samples with N elements we could use fine-grained, but finite volume-elements ΔV and count the number of vectors ΔnV pointing to each of these volume elements to define discrete data points gΔV = ΔnV/N/ΔV for an approximation of a continuous density function via interpolation.

Note that a precise definition of a “dense” vector population would require a measure for the distance between vectors a and b. We can formally achieve this by defining a norm for the difference vector ||b–a||. But I think it is clear what we mean: Two position vectors are close neighbors if and when their endpoints are close neighbors in the ℝn in the sense that the distance between these points becomes very small or negligible.

If each of the components sj of the vectors s can take all values in ℝ we may describe the probability that the population represented by S contains vectors s pointing to a volume element ΔV by a continuous multidimensional probability density function [pdf] for infinitesimal volume elements dVS = ds1 ds2 … dsn.

Let us call the pdf for a continuous random vector pS (s1,s2, …) and define the probability P of finding or creating vectors pointing into a finite volume:

Note that pS(): ℝn→ℝ is a continuous function mapping vectors via their components to real values pS ∈ ℝ. For pS to play its role as a probability density it must fulfill a normalization condition for the covered space V in the ℝn (which in some cases may be the infinite ℝn itself):

In terms of finite discrete distributions: Summing up the number of vectors pointing to discrete volume elements over all (hit) volume elements in the ℝn must give us the total number of all available vectors.

Note that because pS maps the ℝn to ℝ, a continuous pS thereby defines a hyper-surface in some ℝn + 1, and a constant pS = const defines a hyper-surface in the ℝn.

Dependencies and correlations of the components of a random vector

An interesting question is whether and, if so, how pS(s) depends on the 1-dimensional probability density functions pj(sj) or on the parameters [params] of these density functions:

The brackets [ ] denote a very complex relation in the general case; a direct analytical decomposition of pS(s) into the pj(sj) may in general not be possible or only after some sophisticated transformations.

For MNDs, however, we will be able to show how the parameters describing the densities pj(sj) constitute the functional form of pS(s). And we will find transformations which allow for a functional decomposition.

Let us reverse our line of thought and assume that we have a given probability density function pS(s) for a vector distribution (or derived such a distribution by numerical interpolations between elements of a discrete set of sample vectors). Then we may be interested in the 1-dimensional densities of constituting random variables. We introduce the probability density of a “marginal distribution” as

I.e., we integrate pS(s) completely over n-1 coordinate directions of the ℝn, but leave out the 1-dimensional ℝ-subspace for sj. Note that the integral covers all dependencies of the components of the vectors in the set we work with.

Note also that evaluating the integral does not mean that we can decompose pS(s) easily into some analytical relation between the pj(sj). But for some special distributions we should at least be able to provide a relation of pj(sj) to pS(s) mediated by the conditional probability density for the other component values p|sj() in the logical sense of a factorization of probabilities

Random vector composed of independent univariate normal distributions

Let us leave all the subtleties involved in the statistical mappings mediated by S onto potentially continuous data point distributions in the ℝn. We achieve this by discussing concrete ad well defined probability density functions.

Let us focus on a bunch of n independent 1-dimensional normal distributions with probability densities gj(wj). (Knowing that we derive the distributions via n mapping functions Wj ). For a 1-dimensional normal distribution the probability density is given by a Gaussian

The μj is the mean value and σj is the square root of the variance of the Wj distribution. We use the following notation to express that the result of Wj belongs to the set of 1-dimensional normal distributions:

Regarding the parameters: \( \mathcal{N}\left(\mu_j, \sigma_j^2 \right) \) denotes a 1-dimensional normal distribution with a mean value \(\mu_j\) and a variance \(\sigma_j^2 \).

Now, let us use a bunch of n distributions Wj to compose a random vector W with n components

Due to the assumed independence of the 1-dimensional components Wj, the resulting probability density function gw(w) is just the product of the pdfs for the individual components:

Σw symbolizes a diagonal matrix with all the σj-values on the diagonal and otherwise 0 (see the next section for the appearance of this matrix). μw is a vector comprising all the μj-elements. We get a compact expression for gw(w):

For reasons that will become clear in the next post, we formally rewrite this function with the help of a vector notation to become:

The bullets in the exponent mark matrix multiplications (in the sense of Linear Algebra). The inverse of Σw is diagonal, too, and has the reciprocates 1/σj2 as coefficients.

It is easy to prove by proper integration of gw(w) that the marginal distributions Wj of W are just described by the probability densities gj(wj). It is also simple to show that the probability density gw(w) fulfills the required normalization condition

We introduce the notation of a multivariate normal distribution of independent Gaussians in the ℝn as a distribution with a pdf gw(w) and thus having two parameters given by a mean vector μw and a diagonal matrix Σw as

Σw is also called the variance-covariance matrix or shorter covariance matrix of a MND. See below.

Standardized multivariate normal distributions composed of independent 1-dimensional Gaussians

Let us now move our Cartesian coordinate system of our ℝn such that we have

Let us further scale the individual coordinates by

Then we get a centered and standardized normal vector distribution [SNVD] of independent Gaussians :

\( \pmb{\mathcal{N}} \left( \pmb{0}, \, \pmb{\operatorname{I}} \right) \) denotes a random normal vector with a mean vector \(\pmb{\mu} = \pmb{0} \) and an (nxn) identity matrix \( \pmb{\operatorname{I}} \) as its covariance matrix (see the next section). Its probability density has a simpler form, namely

The exponent includes a scalar product between the vectors. In the future we will not write an extra dot between the horizontal and the vertical vectors. Two matrices aside each other symbolize a matrix multiplication in the sense of Linear Algebra – if the number of columns and rows fit.

Why did the leading factor get so simple? Well, the density function re-scales with the coordinates because on the infinitesimal scale we must fulfill:

Because of dzj = (1/σj) dwj , the new volume element dVz in Z-space becomes

The attentive reader recognizes the Jacobi determinant of the coordinate transformation. It eliminates one of the factors in the denominator of the leading term of gw(w) during the transition to gz(z).

For Z we obviously have:

It is easy to show that the pdf of Z remains normalized:

Expectation value and covariance of random vectors

We want to understand the role of the matrix Σw a bit better. Actually, this matrix is closely related to a formal extension of the “covariance” of two 1-dimensional random distributions to random vector distributions. But let us start with the expectation value of the random vector first.

The expectation vector of a random vector is just the vector composed of the expectation values of its (marginal) component distributions:

The covariance of a random vector results in a natural way by a generalization of the covariance of two 1-dim distributions X and Y:

A multidimensional generalization has to take into account all possible combinations (Sk, Sj). This indicates already that we need a matrix. A simple formal way to get expectation values of all pairwise combinations of components from a random vector S is to use a matrix product between ST and S. This leads almost naturally to a matrix of expectation values:

Note the order of transposition in the definition of Cov! A (vertical) vector is combined with a transposed (horizontal) vector. The rules of a matrix-multiplication then give you a matrix as the result! The expectation value has to be determined for every element of the matrix. Thus, the interpretation of the notation given above is:

Pick all pairwise combinations (Sj, Sk) of the component distributions. Calculate the covariance of the pair cov(Sj, Sk) and put it at the (j,k)-place inside the matrix.

Meaning:

The above matrix is the (variance-) covariance matrix of S, which we also abbreviate with ΣS.

As Wikipedia tells you, some people call it a bit different. I refer to it later on just as the covariance matrix (of a random vector). Because the covariance of two independent distributions X and Y is zero

we directly find

We have found an interpretation of the matrix appearing in the probability density gw(w) of \(\pmb{W} \,\sim\, \pmb{\mathcal{N}}_n \left(\pmb{\mu}_{\small W},\, \pmb{\Sigma}_{\small W} \right) \):

The matrix Σw-1 appearing in the exponential function of gw(w) for a normal random vector with independent Gaussians as components is the inverse of the variance-covariance matrix Σw = Cov(W).

We expect that correlations between the 1-dimensional component distributions will leave their fingerprints in the coefficients of these matrices.

An open question: The attentive reader may now pose the following question: The matrix Σw = Cov(W) may be well defined for a general random vector. But how can we be sure that the inverse matrix always exists? This is a very good question – and we will have to deal with it in forthcoming posts on MNDs.

Correlation and independence

Equipped with the above definitions we can now better distinguish the independence of individual distributions on each other from their un-correlation. Independence means that the probability density function of a random vector factorizes into a product of the probability density functions for the component distributions.

Correlation instead refers to the covariance in the sense that some coefficients off the diagonal of the covariance matrix are not zero:

Expectation value and covariance of standardized normal random vectors with independent components

For our centered and standardized normal distribution Z we find:

Conclusion and outlook

Objects with many quantifiable features can be described by vectors. Multivariate random vectors extend the idea of 1-dimensional, i.e. univariate random variables into the ℝn. A random vector represents a map of an object population as well as derived statistical samples and related statistical probabilities for the appearance of vectors with certain properties.

Samples are created from a population by the repeated application of a defined process of statistically picking individual elements. By accounting vectors with certain properties we get to probabilities. A random vector thus describes a probabilistic distribution of vectors and related data points. The component values of these vectors follow univariate probability distributions. The probabilities of the univariate distributions can be understood as marginal probabilities of the random vector.

A limit transition from finite sets of sample vectors to huge or even infinite sets fed from an underlying continuous population may lead to distributions of (position) vectors whose endpoints densely fill the ℝn. A limit process allows for the definition of a probability density function for random vector distributions. The probability density functions describing the distribution of vector endpoints in the ℝn can only in simple cases be decomposed into a simple combination of the 1-dimensional pdfs for the components. In general the marginal distributions may not be independent of each other or uncorrelated.

By using the expectation values of marginal distributions we can define mean vectors for such vector distributions in a straightforward way. The quantity corresponding to the variance of univariate distributions is for a random vector distribution becomes a matrix – the variance-covariance matrix.

When the random vector is derived from independent univariate normal distributions the covariance matrix becomes diagonal and the probability density of the vector distribution is given by a product of the univariate Gaussians for the marginal distributions. The probability density of a multivariate normal distribution Z composed of independent and standardized marginal Gaussians gets a particularly simple form and the covariance matrix reduces to a (nxn) identity matrix.

In the next post of this series

we will apply a linear transformation to our special distribution Z. This will be done by applying a matrix A to the z-vectors. We will try to find out how this impacts the probability density of the transformed distribution.