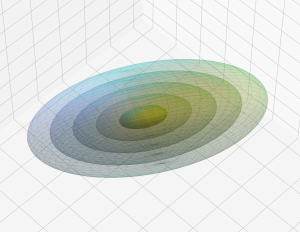

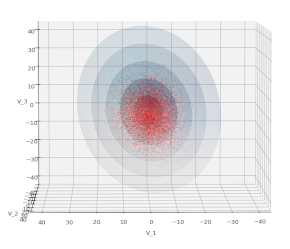

Multivariate Normal Distributions – III – Variance-Covariance Matrix and a distance measure for vectors of non-degenerate distributions

In previous posts of this series I motivated the functional form of the probability density of a so-called “non-degenerate Multivariate Normal Distribution”. In this post we will take a closer look at the matrix Σ that controls the probability density function [pdf] of such a distribution. We will show that it actually is the covariance matrix of the…