In previous posts of this series I have motivated the functional form of the probability density of a so called “non-degenerate Multivariate Normal Distribution“.

- Multivariate Normal Distributions – II – Linear transformation of a random vector with independent standardized normal components

- Multivariate Normal Distributions – I – Basics and a random vector of independent Gaussians

In this post we will have a closer look at the matrix Σ that controls the probability density function [pdf] of such a distribution. We will show that it actually is the covariance matrix of the vector distribution. Due to its properties the inverse of Σ can be used to define a special measure for the distance of the distribution’s vectors from their mean vector. Setting this distance to a constant value defines a contour surface of the probability density and gives rise to quadratic forms describing surfaces of multidimensional ellipsoids.

While we dive deeper into mathematical properties we should not forget that our efforts have the goal to analyze real vector distributions appearing in Machine Learning processes. Numerically evaluated hyper-contours which are close to multidimensional ellipsoids would be one of multiple indicators of an underlying multidimensional normal distribution. We will also dare a first look into the topic of degenerate normal distributions.

We abbreviate the expression “Multivariate Normal Distribution” by either MND or, synonymously MVN. Both abbreviations appear in the literature. We refer to the “variance-covariance matrix” of a random vector just as its “covariance matrix”.

Probability density function and normalization

Our idea of a random vector YN giving a non-degenerate MND is based on the application of an invertible linear transformation onto a random vector Z representing a continuous distribution of vectors z whose component values follow independent and standardized 1-dimensional normal distributions :

For details of Z see post I. My is some (n x n)-matrix representing the transformation. Remember that the z-vector distribution had a pdf

with contour hyper-surfaces being n-dimensional spheres. An evaluation of the transformation gave us a pdf gy(y) for the distribution of the transformed vectors y = My z ( with y, z ∈ ℝn) :

ΣY is a symmetric and invertible matrix defined by

The factor in front is just a normalization factor (coming, of course, from Z). By our MND-construction we actually have found the useful formula :

You can prove this by using the Jacobi determinant of the transformation. In the sense of post I YN is mapping objects onto a continuous distribution of vectors y ∈ ℝn being connected to vectors z given by another random vector Z. Note that we describe the vectors and their components in an Euclidean Coordinate System [ECS] spanning the ℝn .

Getting the “variance-covariance matrix” from the probability density

In post I of this series we defined the “variance-covariance matrix” of a random vector and the related vector distribution. An application gives us :

So, actually our symmetric Matrix ΣY = My • MyT is nothing else than the variance-covariance matrix of our random vector YN .

Note that symmetry does in no way imply a diagonality of the matrix. On the contrary: For a general My the resulting off-diagonal elements of ΣY will assume non-zero values. In contrast to the previously discussed normal distributions W and Z with independent components, this indicates that we will in general find a correlation between the components of the transformed random vector YN. We will later see that this has a geometrical interpretation

Positive-definite covariance matrix?

A symmetric matrix is invertible if it is positive-definite. Can we find out about this? By definition for a real valued matrix like ΣY we request for any vector y ∈ ℝn other then the zero vector 0 :

Replacing ΣY by the automorphism My and MyT we get:

For a real valued invertible (n x n)-matrix MyT the equal sign comes about only for the trivial case y = 0. So, we have a positive definite matrix. Note that we could have also derived this from back-transforming y to a z vector by using the inverse of My and by evaluation of the matrix products to become the identity matrix.

Actually, Linear Algebra teaches us that any real-valued symmetric matrix A is positive definite if there exists a real non-singular matrix M such that A = M • MT ( see e.g. [1]). And: A positive semi-definite and invertible matrix is positive-definite! So, we could have directly concluded it from our definitions. But note that the derivation above holds also for more general cases of M.

Consistency check, just for completeness: If M is invertible, so is MT and, therefore, MT • x = 0 only has the trivial solution x = 0. Thus: With an invertible M, the matrix Σ = MMT becomes symmetric (by construction!) and positive-definite – and thus invertible! In addition this is consistent with the fact that a quadratic M with full rank (n!) is invertible. But if M has full rank, then MT has, too, and also the matrix product MMT! The latter follows from

and setting B = AT. See e.g. [2] .

The transpose and the inverse of the covariance matrix ΣY are positive definite, too

It is relatively easy to show that the inverse of a positive-definite matrix A is positive-definite, too. First we can show that AT is positive definite. Because the matrix product (xT A x) is a scalar we have:

and we get positive values as for A itself. Now, let us take a vector y = Ax. Then

So, we know for our special case of non-degenerate normal distributions that ΣY-1 is both symmetric and positive-definite.

The “Mahalanobis distance” dN of a non-degenerate multivariate normal vector distribution

For a 1-dimensional Gaussian distribution

we can interpret the square root of the term in the exponent as a distance dx of x from the mean value μx measured in units of the standard variation σx.

What about the exponent

appearing in the probability density function gy(y) for our continuous vector distribution Y? To be able to define a “distance” in the sense of

we must obviously require that the expression under the square root is a positive real number, i.e. ΣY-1 must be a positive-definite matrix. For our case of non-degenerate normal distributions Y we know that this is the case. So, the exponent in the probability density function for a non-degenerate MVN gives us really a special measure d (y, μy) of the distance of a vector y of a non-degenerate normal distribution YN from its average vector μy.

This distance is called the “Mahalanobis distance” of a vector bing a member of a non-degenerate MVN.

What does a constant Mahalanobis distance mean?

The probability density of a non-degenerate MVN obviously assumes constant values for constant values of DN and dN. A condition

restricts the vectors and couples its components. When we break this expression down to the components yj we get, due to the symmetry of ΣY-1, a term of the form

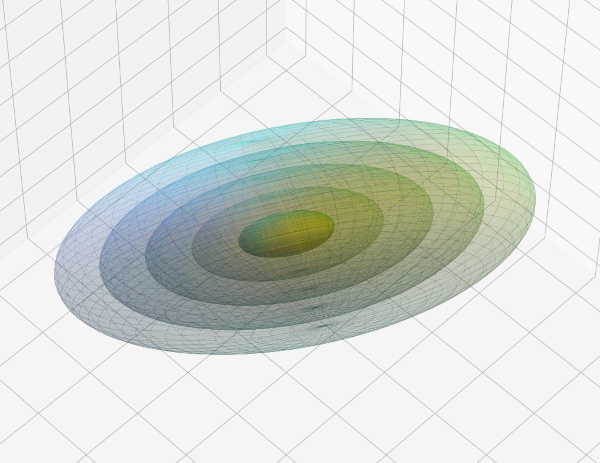

with the ξi,j being the coefficients of ΣY-1. This is obviously a quadratic form. Such a restrictive form for the components of vectors defines n-dimensional ellipsoids, more precisely the surface of ellipsoids. So, e.g. in 3 dimensions we would get hyper-surfaces of the following form for different growing (integer) values of C:

In other forthcoming posts we will see that the axes of these ellipsoids are rotated against the axes of the Euclidean Coordinate System for the ℝn.

Let us look at back to the vectors z of the Z-distribution from which we created our non-degenrate MVN YN : All the vectors z fulfilling e.g.

have a Mahalanobis distance of dZ = 1 from the origin of our ECS. There endpoints, therefore, reside on the surface of a unit sphere. For some constant value C = z • zT = const, the z-vectors have endpoints residing on the surfaces of other n-dimensional spheres with different radii. The fact that a linear transformation maps points on a coherent hyper-surface onto points of another coherent hyper-surface indicates that concentric contour-hypersurfaces of our basic Z-distribution are transformed into concentric ellipsoidal contour-hypersurfaces of Y.

We will prove that this is indeed true in the next post. The axes of the ellipsoid are rotated against the axes of the ECS and its midpoint is shifted by μy against the ECS origin.

Degeneration and its relation to the transformation “M” and the covariance matrix Σ

The reader may have thought about the word “non-degenerate“. We can understand this a bit better now. The first point is that the probability density function gy(y) only delivers reasonable values if the determinant of the covariance matrix det(ΣY) ≠ 0. For a quadratic symmetric real valued matrix this means invertibility and thus the existence of a Mahalanobis distance measure.

A so called degenerate distribution would be based on a non-quadratic or a non-invertible quadratic matrix M. ΣY then would still be symmetric, but not invertible. In these cases a probability function gy(y) is not defined and does not give us a density in the ℝn. Why?

The reason in case of a singular transformation matrix M is that the transformed distribution Y reduces to some lower dimensional sub-space of ℝn having a dimension m < n. We can conclude this from the fact that the eigenvectors of a non-invertible (symmetric) matrix ΣY are not independent of each other. They span a lower dimensional space. This is consistent to the evolution that the density function takes for matrices ΣY having determinants closer and closer to zero. In the limit process such a density would become infinitely big as the denominator for gy(y) indicates.

For non-quadratic matrices the image of the transformation matrix either has a lower dimensionality than the original vectors or the image has a lower dimensionality than the surrounding target space. We will come back in detail to such cases in forthcoming posts.

Thus a singular non-invertible or a non-quadratic transformation M would map a Z–distribution of vectors onto a lower-dimensional sub-space of the ℝn or a surrounding target space ℝm (with m > n). While we, for these cases, may not get a reasonably defined density in the target space of the transformation M, the resulting distribution may still have a distance measure and a density defined in a lower dimensional space. We will come back to this point in a later post.

Conclusion

In this post we followed our line of thought for non-degenerate MVNs YN which we have defined as the result of invertible linear transformations M applied to a very elementary MND Z ∼ Nn(0, I). Z was based on independent and standardized Gaussian probability densities for the component values of the respective continuously distributed vectors z around a mean vector 0. We found that the matrices Σ and Σ-1, which define the probability density for YN -distributions are symmetric, invertible and – consistently – positive-definite. We could use Σ-1 to define a distance between vectors of the distribution from its mean vector, the so called Mahalanobis distance. A constant value of this distance defines position vectors whose endpoints reside on the surfaces of multidimensional ellipsoids. We understood that neither the density of a target distribution YN created by singular or non-quadratic matrix M would be well-defined.

In the next post of this series

we will look at interesting de-compositions (= factorizations) of the covariance matrices of a non-degenerate MVNs and its inverse matrix. This will allow us to understand a non-degenerate MVN as the result of a sequence of special linear transformations.

Links / Literature

[1] “Positive Definite Matrix”, https://mathworld.wolfram.com/ Positive Definite Matrix.html

[2] Wikipedia article on the rank of matrices, https://en.wikipedia.org/ wiki/ Rank_%28 linear_ algebra%29