In the math section of this blog, we try to cover interesting aspects of Multivariate Normal Distributions [MVNs]. The topic of this post series is the covariance matrix of an MVN-like distribution confined inside a hyper-surface of constant probability density. Outside of the surface we set the probability density to zero. This gives us a “cut-off” MVN distribution. Contour surfaces of an MVN in general enclose a central ellipsoidal volume in the MVN’s n-dimensional space. We have called such a volume a core.

We want to answer the following question: How do the covariance matrices of such a core and its cut-off MVN differ from the covariance matrix of the related standard MVN which stretches out to infinite distances in the n-dimensional space? In this post we are going to analyze the 2-dimensional problem for a cut-off Bivariate Normal Distribution [BVN] in detail. We will then use a respective sample of data points inside a finite Mahalanobis distance D to numerically test and verify theoretical predictions.

In a first step we will apply the results of the 2nd post in this series and evaluate required integrals in polar coordinates. The analytical result will confirm that the covariance matrix of the original MVN can be calculated by multiplying the core’s covariance matrix by a factor which is the same for all matrix elements. The core’s cov-matrix can be estimated from a numerical integration of respective sample data. We will, however, learn that we must apply an additional correction factor to compensate for a systematic difference still existing between our theoretical results and numerical integrations. This factor is given by a proper normalization of the cut-off distribution.

We use abbreviations and conventions defined in the 1st post of this series. Note that some authors use the abbreviations BVD, MVD instead of our acronyms BVN, MVN, respectively.

Previous posts

- Post 1: Covariance matrix of a cut-off Multivariate Normal Distribution – I – intricate integrals with exponentials over the volumes and surfaces of n-dimensional ellipsoids?

- Post 2: Covariance matrix of a cut-off Multivariate Normal Distribution – II – integrals over volume and surface of an n-dimensional sphere

A two dimensional scenario

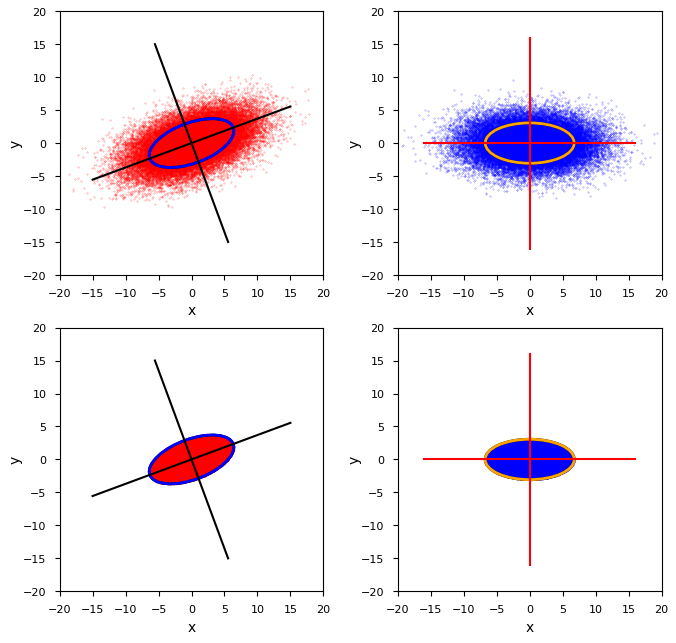

We reuse the 2-dim BVN-example already presented in the 1st post of this series. It was based on the following (2×2)-cov-matrix Σ2 :

We want to calculate the cov-matrix of the infinite distribution depicted in the upper left quadrant. The data available are, however, only data for the cut-off distribution depicted in the lower left corner. The value of the Mahalanobis distance dm defining the surface of the core is

Let us in a first step analyze the situation mathematically for a general D. We, of course, use results of post 2.

Integrals to solve for the n-dim case

The random vector YN of a MVN has a probability density g(y) controlled by a symmetric and invertible covariance matrix ΣY = CovY; see post 2. This density g(y) has contour surfaces surrounding the volume of an ellipsoid in the n-dimensional space of the MVN. In post 2, we have shown that we can nevertheless evaluate a volume integral over a spherical volume to get the covariance matrix CovC of the contents of a MVN’s ellipsoidal core:

The spherical volume is just a mapping of the original ellipsoidal core of Y mediated by the coordinate transformation [MY]-1 that maps a general YN to Z. Z is the random vector of a special MVN-distribution built from independent 1-dimensional Gaussian distributions. (The MVN Y can be generated from Z by MY.) The term in the square brackets actually gives us a matrix.

The integration (2) has to be performed over the volume of an n-dimensional sphere of radius r=D :

Note: I deviate a bit regarding dimensionality subscripts from other authors, who prefer to use (n-1) as a subscript of surfaces to indicate the lower dimensionality of the manifold in the ℝn.

MY is a linear (n×n)-transformation matrix which generates a general n-dim MVN of a random vector Y with correlations between its components (see the 2nd post for details):

CovY = ΣY is the symmetric and invertible covariance matrix of the MVN.

Two dimensions only …

For two dimensions, only, the exponential in integral (2) is the probability density of a special standardized BVN distribution for a respective random vector Z = Z2d = (Z1, Z2)T – with no correlation of its two marginals,

Z2d has a very simple probability density function, given at the end-point of a vector z2d = (z1, z2)T
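For reference, the density of a standardized BVN with two uncorrelated marginals of unit variance has the well-known form below. It is written in the notation of this post; the symbol g_Z for the density of Z2d is taken from later sections.

```latex
g_{Z}(\mathbf{z}_{2d}) \;=\; \frac{1}{2\pi}\,
  \exp\!\left( -\frac{1}{2}\left( z_1^{2} + z_2^{2} \right) \right)
```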

We name the cov-matrix of a related 2-dim general BVN for a random vector Y2d, which we may have created from Z2 by an invertible (2×2) matrix M2 (see post 2 for details):

The integral becomes

The “volume” V2,Dsph of the spherical core (for dm=D) in (6) actually is the area of a circle. To make the notation look more familiar, we replace z1, z2 by x and y:

Our integral then becomes

Integrals for the off-diagonal elements

Let us write down the central integrals for the off-diagonal elements of the matrix Cov2,C for the contents within our 2-dim core

Switching to polar coordinates (r, φ) we find
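A sketch of this step, assuming the normalization prefactor 1/(2π) of the standardized density, the substitution x = r cos φ, y = r sin φ with area element r dr dφ, and W_xy as a name for the off-diagonal element (chosen here in analogy to Wxx and Wyy):

```latex
W_{xy} \;=\; W_{yx}
\;=\; \frac{1}{2\pi} \int_{0}^{D} r^{3}\, e^{-r^{2}/2}\, dr
      \int_{0}^{2\pi} \cos\varphi \, \sin\varphi \; d\varphi
\;=\; 0 ,
\qquad \text{since} \quad
\int_{0}^{2\pi} \cos\varphi \, \sin\varphi \; d\varphi
\;=\; \frac{1}{2} \int_{0}^{2\pi} \sin(2\varphi)\; d\varphi \;=\; 0 .
```

The angular integral vanishes independently of the radius D, so the off-diagonal elements are zero for any core size.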

I.e. CovC in our 2-dim case becomes a diagonal matrix W2,diag :

Based on elementary symmetry arguments, we had already predicted this in post 2 – even for the general n-dimensional case.

Diagonal matrix elements

We can already conclude for symmetry reasons that Wxx = Wyy. But let’s do it explicitly:

The integral of cos² can be looked up in tables or solved by substitution. Note that for the 2nd integral we changed the integration variable from r to r², which gave us an additional factor of 1/2 (due to d(r²) = 2r dr). Analogously,

We arrive at a first intermediate result, namely that the cov-matrices CovC and Σ2 differ by an element-independent factor W2
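Spelled out, the steps of this subsection may be sketched as follows. The sketch assumes the prefactor 1/(2π) of the standardized density, x = r cos φ, y = r sin φ, and the substitution u = r²; the relation Σ2 = M2 M2ᵀ is taken over from post 2.

```latex
\begin{aligned}
W_{xx} &= \frac{1}{2\pi} \int_{0}^{2\pi} \cos^{2}\varphi \; d\varphi
          \int_{0}^{D} r^{3}\, e^{-r^{2}/2}\, dr
        \;=\; \frac{1}{2} \int_{0}^{D} r^{3}\, e^{-r^{2}/2}\, dr
        \;=\; \frac{1}{4} \int_{0}^{D^{2}} u \, e^{-u/2}\, du , \\[4pt]
W_{yy} &= \frac{1}{2\pi} \int_{0}^{2\pi} \sin^{2}\varphi \; d\varphi
          \int_{0}^{D} r^{3}\, e^{-r^{2}/2}\, dr
        \;=\; W_{xx} \;=:\; W_{2} , \\[4pt]
\mathrm{Cov}_{2,C} &= M_{2} \left( W_{2}\, \mathbb{1} \right) M_{2}^{T}
        \;=\; W_{2}\, \Sigma_{2} .
\end{aligned}
```

Both angular integrals give π, which is why the two diagonal elements coincide.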

As predicted in post 2. But remember that we have ignored any re-normalization of the cut-off distribution, so far.

The integral over the radius

The remaining radial integral can be integrated by parts :
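A sketch of the integration by parts, assuming the radial integral has already been brought into the form (1/4) ∫ u exp(-u/2) du with u = r² and the upper limit D²:

```latex
\begin{aligned}
\int_{0}^{D^{2}} u \, e^{-u/2}\, du
  &= \Big[ -2\,u\, e^{-u/2} \Big]_{0}^{D^{2}}
     + 2 \int_{0}^{D^{2}} e^{-u/2}\, du
   \;=\; -2 D^{2} e^{-D^{2}/2} + 4 \left( 1 - e^{-D^{2}/2} \right) , \\[4pt]
W_{2} &= \frac{1}{4} \int_{0}^{D^{2}} u \, e^{-u/2}\, du
   \;=\; 1 - \left( 1 + \frac{D^{2}}{2} \right) e^{-D^{2}/2} .
\end{aligned}
```

As a plausibility check, W2 tends to 1 for D → ∞ (the full BVN is recovered) and to 0 for D → 0.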

So, this is our (preliminary) prediction for the correction factor, which we should apply to the cov-matrix of a cut-off BVN’s core to get the cov-matrix of the original BVN. So, let us be brave and compare this with numerical results.

Numerical integration of our sample data for the core

Regarding the numerical evaluation of a given sample, our theoretical integration of the integrands with the probability density must of course be replaced by a corresponding summation over the data points and their x²-values. An approximation to the probability density is achieved by dividing by the total number of elements in the core. There are multiple tools available, e.g. from Scikit-learn, Numpy or SciPy, which provide numerical values of the core’s cov-matrix based on a sample of respective data points and their position vectors. What are the numerical steps? I describe them for Numpy’s function “cov()”.

- Step 1: Create data of some MVN (e.g. in 4 dimensions). Project the data down to a coordinate plane (by slicing the data arrays) to get a 2-dim distribution of data points and related position vectors. Extract the elements of the respective matrix Σ2 from ΣY.

- Step 2: Create a reduced sample of data points by calculating each 2-dim vector’s Mahalanobis distance dm with the given matrix and throwing away all points with dm > D.

- Step 3: For the remaining data of your core determine an estimate of its cov-matrix Cov2,C with the help of Numpy’s cov() function.

- Step 4: Multiply the resulting matrix by the theoretical estimation factor f2,D,pred = 1/W2.
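The four steps above can be sketched with Numpy as follows. This is only a minimal illustration: the 4-dim matrix `sigma_y`, the function names and the random seed are hypothetical choices, not the data of this post. The closed form used for W2, namely 1 - (1 + D²/2) exp(-D²/2), is what the radial integration by parts yields and is stated here as an assumption.

```python
import numpy as np

def w2_factor(D):
    """Assumed closed form of W2 for a 2-dim core with Mahalanobis radius D."""
    return 1.0 - (1.0 + 0.5 * D**2) * np.exp(-0.5 * D**2)

def naive_estimate(sigma_y, axes=(0, 1), D=1.4, n_points=40_000, seed=0):
    """Steps 1 to 4: returns Sigma_2, the core's cov-matrix, the prediction
    obtained with the factor 1/W2, and the number of points in the core."""
    rng = np.random.default_rng(seed)
    dim = sigma_y.shape[0]

    # Step 1: sample a zero-mean MVN and project it onto a coordinate plane
    data = rng.multivariate_normal(np.zeros(dim), sigma_y, size=n_points)
    ax = list(axes)
    pts = data[:, ax]
    sigma2 = sigma_y[np.ix_(ax, ax)]

    # Step 2: squared Mahalanobis distance of each point; keep d_m <= D
    dm_sq = np.einsum("ij,jk,ik->i", pts, np.linalg.inv(sigma2), pts)
    core = pts[dm_sq <= D**2]

    # Step 3: cov-matrix of the core (np.cov normalizes by the core's size)
    cov_core = np.cov(core, rowvar=False)

    # Step 4: multiply by the estimation factor f_pred = 1 / W2
    sigma2_pred = cov_core / w2_factor(D)
    return sigma2, cov_core, sigma2_pred, core.shape[0]

# Hypothetical 4-dim covariance matrix (A @ A.T is positive definite)
A = np.array([[1.0, 0.0, 0.0, 0.0],
              [0.6, 1.0, 0.0, 0.0],
              [0.3, 0.2, 1.0, 0.0],
              [0.1, 0.4, 0.5, 1.0]])
sigma_y = A @ A.T

sigma2, cov_core, sigma2_pred, n_core = naive_estimate(sigma_y)
print("Sigma_2 used for generation:\n", sigma2)
print("Cov of core (np.cov):\n", cov_core)
print("Prediction with 1/W2:\n", sigma2_pred)
print("Points in core:", n_core)
```

Printing the prediction next to the matrix used for data generation allows a direct comparison of the two.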

The result of our theoretical estimation factor is (up to rounding):

The matrix resulting from Numpy’s cov() for our case with 40,000 points used during BVN-creation is

So, the prediction of values for the original matrix according to relation (16) would be

Which, unfortunately, is blatantly wrong!

Another correction factor from an integrated chi-squared distribution

Why did we get a wrong result? The reader has probably understood it already: While our theoretical formulas (2), (9) and (11) are in principle correct, Numpy’s cov-function does something different from what we did during our theoretical integration. Our theoretical derivation referred to a probability density which was normalized such that the integral of the probability density gY(y) over the infinite MVN is 1; see the 2nd post, eq. (13). This explains the normalization factor in front of the integrals (e.g. in eqs. (2) and (11)).

However, what Numpy’s cov() and similar tools do is to normalize with respect to the number of available data points in the sample provided to them – i.e. the number of data points in our elliptical (or spherical) core. In our case this is only a fraction of the total number of points used to create a sample for the MVN.

In other words: So far, we have forgotten to properly normalize our cut-off distribution confined to the core having a zero probability density outside. The normalization factor is in the general case of n dimensions, of course, given by the integral

Regarding theory this means:

- Whenever we use numerically evaluated cov-matrices for a core region’s data sample as the base of an estimation, we must apply an additional estimation factor Fn,D which reflects the finite integral of the MVN’s probability density over the core’s region, only.

We will look at the integral Fn,D for the general case a bit closer in the next post of this series.

We know already that this is mapped to

for the 2-dim situation. We could solve this integral directly. We can, however, use a result which I have proven in another post of this blog; see [1]. There we integrated a BVN’s probability density over the area of an ellipse up to Mahalanobis distance dm=D and got for the respective probability P(dm≤D) of finding a data point within the region up to D:
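The result referred to here is the cumulative distribution function of a chi-squared distribution with two degrees of freedom, evaluated at D². In the notation of this post:

```latex
P(d_m \le D) \;=\; \frac{1}{2\pi} \int_{0}^{2\pi} d\varphi
                   \int_{0}^{D} r \, e^{-r^{2}/2}\, dr
\;=\; 1 - e^{-D^{2}/2} .
```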

This formula is just what we need to normalize our 2-dim cut-off distribution properly. We just have to multiply the standard probability density (6) by a factor 1/P(D). This leads to a modification of our formula for Σ2 from Cov2,C:

with

And regarding an estimated value Σ2,est based on a numerically derived Cov2,C,num of the core’s covariance matrix:
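Put together, the normalization argument of this section may be sketched as below. The symbol f_{2,D,corr} for the corrected estimation factor is a name chosen here for illustration, and the closed forms of W2 and P(D) are the ones that follow from the radial integration and from the result in [1].

```latex
\begin{aligned}
\mathrm{Cov}_{2,C,num} &\;\approx\; \frac{W_{2}}{P(D)} \, \Sigma_{2} , \\[4pt]
\Sigma_{2,est} &\;=\; f_{2,D,corr} \; \mathrm{Cov}_{2,C,num} , \qquad
f_{2,D,corr} \;=\; \frac{P(D)}{W_{2}}
\;=\; \frac{1 - e^{-D^{2}/2}}
           {1 - \left( 1 + \dfrac{D^{2}}{2} \right) e^{-D^{2}/2}} .
\end{aligned}
```

For D → ∞ both P(D) and W2 tend to 1, so the factor approaches 1 as it should.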

Results

Application of the correct theoretical formula (25) gives for our 2-dim example and D=1.4:

These values are actually very close to the real values. The error is less than 0.2%. For D=1.8, we get

The results depend, of course, a bit on the statistical generation of the sample’s data points, but on average the error is less than 2%. I leave it to the reader to evaluate predictions from statistical samples for other values of D.
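For readers who want to try other values of D, a minimal sketch is given below. It assumes the closed forms W2 = 1 - (1 + D²/2) exp(-D²/2) and P(D) = 1 - exp(-D²/2). The matrix `sigma2_true`, the seed and all function names are hypothetical and are not the example data of this post.

```python
import numpy as np

def core_probability(D):
    """P(d_m <= D) for a BVN: chi-squared CDF with 2 degrees of freedom at D^2."""
    return 1.0 - np.exp(-0.5 * D**2)

def w2_factor(D):
    """Assumed closed form of W2 (unnormalized core integral) in 2 dimensions."""
    return 1.0 - (1.0 + 0.5 * D**2) * np.exp(-0.5 * D**2)

def corrected_factor(D):
    """Estimation factor including the normalization of the cut-off BVN."""
    return core_probability(D) / w2_factor(D)

def estimate_sigma2(sigma2, D, n_points=40_000, seed=1):
    """Sample a zero-mean BVN, cut it off at d_m = D and estimate Sigma_2."""
    rng = np.random.default_rng(seed)
    pts = rng.multivariate_normal(np.zeros(2), sigma2, size=n_points)
    dm_sq = np.einsum("ij,jk,ik->i", pts, np.linalg.inv(sigma2), pts)
    cov_core_num = np.cov(pts[dm_sq <= D**2], rowvar=False)
    return corrected_factor(D) * cov_core_num

# Hypothetical (2x2) covariance matrix used for data generation
sigma2_true = np.array([[2.0, 0.8],
                        [0.8, 1.0]])

results = {D: estimate_sigma2(sigma2_true, D) for D in (1.0, 1.4, 1.8, 2.5)}
for D, est in results.items():
    # Largest element-wise deviation, relative to the largest matrix element
    dev = np.abs(est - sigma2_true).max() / np.abs(sigma2_true).max()
    print(f"D={D}: factor={corrected_factor(D):.4f}, max. rel. deviation={dev:.2%}")
```

The deviations fluctuate with the seed and the sample size. Small values of D leave fewer points in the core and amplify statistical noise through a larger factor.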

Numerical cross checks – and numerical integration of gZ(z) over the circle area

For those interested in numerical experiments some additional hints:

You can of course try to approximate the volume integral (8) of the integrands x² · gZ(z) and/or y² · gZ(z) over a spherical core numerically as an alternative way of getting an estimation factor. This requires both rotating the sample’s vectors by the angles given by the eigenvectors of Σ2 (see the plot at the top of this article) and, afterward, standardizing them with the standard deviations σ1/2 along the principal axes. The latter are given by the eigenvalues λ1/2 of Σ2 as σ1/2 = sqrt(λ1/2). In addition you may determine the fraction of points in the core in relation to the total number of points created for the MVN. This eventually provides a numerically derived factor C2,D,num for our estimation. Let us compare these numerical factors with the theoretical one, e.g. for D=1.4 and D=1.8:

I have averaged the numerical results for x² · gZ(z) and y² · gZ(z). These rather good results open up a way for a purely numerical approach to the estimation of a BVN’s cov-matrix from data points of a limited core.
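One possible way to carry out such a cross check is sketched below. It is an illustration under stated assumptions, not the procedure used for the numbers in this post. The sample is assumed to have zero mean, the integrals are approximated by sums over core points divided by the total number of points, and the standard deviations along the principal axes are taken as the square roots of the eigenvalues of Σ2. The matrix `sigma2_true` and all function names are hypothetical.

```python
import numpy as np

def numerical_factor(points, sigma2, D):
    """Numerically derived estimation factor from a zero-mean BVN sample."""
    # Rotate into the eigenbasis of Sigma_2 and standardize each axis
    lam, V = np.linalg.eigh(sigma2)          # eigenvalues and eigenvectors
    z = (points @ V) / np.sqrt(lam)          # sigma_i = sqrt(lambda_i)

    # Points inside the circle of radius D in the standardized space
    in_core = (z**2).sum(axis=1) <= D**2
    n_total = points.shape[0]

    # Monte Carlo approximations of the integrals of x^2 g_Z and y^2 g_Z
    w_xx = (z[in_core, 0]**2).sum() / n_total
    w_yy = (z[in_core, 1]**2).sum() / n_total
    w_num = 0.5 * (w_xx + w_yy)              # average of both directions

    # Fraction of points in the core approximates P(d_m <= D)
    p_num = in_core.sum() / n_total
    return p_num / w_num, w_num, p_num

def theoretical_factor(D):
    """Assumed closed form P(D) / W2 for the 2-dim case."""
    e = np.exp(-0.5 * D**2)
    return (1.0 - e) / (1.0 - (1.0 + 0.5 * D**2) * e)

# Hypothetical (2x2) covariance matrix and sample
sigma2_true = np.array([[2.0, 0.8],
                        [0.8, 1.0]])
rng = np.random.default_rng(2)
sample = rng.multivariate_normal(np.zeros(2), sigma2_true, size=200_000)

factors = {}
for D in (1.4, 1.8):
    c_num, w_num, p_num = numerical_factor(sample, sigma2_true, D)
    factors[D] = (c_num, theoretical_factor(D))
    print(f"D={D}: numerical={c_num:.4f}, theoretical={theoretical_factor(D):.4f}")
```

The eigen-decomposition replaces an explicit rotation angle; `points @ V` expresses every position vector in the basis of the principal axes.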

Conclusion

We have evaluated the factors required for an estimation of the covariance matrix Σ2 of a 2-dimensional BVN from sample data of a respective cut-off distribution confined and limited to a core. The core was defined by a given Mahalanobis distance dm=D. In addition to the results of previous posts, we saw that we must take care of a proper normalization of the confined cut-off distribution. After we took this point into account we got a very good match of theoretical and numerical results for our sample of data points. We evaluated the core’s cov-matrix CovC ≈ CovC,num by numerical means and applied both theoretically and numerically derived estimation factors to get estimation values for the elements of Σ2 . The error was less than 1% for a case with around 25,000 data points inside a core defined by D=1.4.

In the next post of this series

we look a bit closer at the volume integrals governing the 3-dimensional case.

Links and literature

[1] R. Mönchmeyer, 2025, “Bivariate Normal Distribution – integrated probability up to a given Mahalanobis distance, the Chi-squared distribution and confidence ellipses”,

https://machine-learning.anracom.com/2025/07/30/bivariate-normal-distribution-integrated-probability-up-to-a-given-mahalanobis-distance-the-chi-squared-distribution-and-confidence-ellipses/